SICP

SICP 2.3.4 Solutions

April 7, 2015 10:28

Ex 2.67

This involves simply demonstrating the result of an already-written operation. There’s nothing to do other than make sure to use the correct arguments for the procedure. Its primary purpose is to be used as a test for the next exercise.

Ex 2.68

There are several ways to tackle the encoding of a symbol. The approach I used is very simple, in that it alternately searches the left and right branches for the symbol. If the symbol is found at a given leaf, that leaf returns a true value (an empty list), otherwise it returns false. That empty list is then built up as a symbol recursively, by prepending a 0 or 1, depending on the branch where it was found.

Determining that a symbol is not in the tree using this approach involves searching the entire tree. This could be improved, however, since the procedure find-symbol can be avoided entirely if some other method reveals the symbol to not be in the tree. For example, a check could be done on the root symbol-list that would determine quickly if the symbol is present or not. This has to be weighed against the fact that the vast majority of the time, the symbols will be in the tree, and in the exceptional error case, the operation cannot continue. More considerations on performance are discussed in Exercise 2.72 below.

Ex 2.69

The key to this exercise is observing that thanks to the ordered set implementation, the two elements with the smallest weight are always the first two elements of the set. This lends itself to a brief iterative function — create a new node from the first two elements of the set, add that as an element to the remainder of the set, and repeat until there are fewer than two elements. In the end the remaining element of the set is the fully merged set.

Ex 2.70

Most of this exercise involves just making sure you understand how to use the encoding and decoding procedures. The last part is a demonstration of how Huffman coding improves the efficiency of the encoded message. The number of bits for the Huffman coding is determined empirically, by simply encoding the message. To determine how many bits a fixed length encoding would require, we determine how many bits are needed for all symbols to have a unique encoding. This is the smallest power of 2 that is equal to or greater than the number of symbols. The eight symbols of the doo-wop ‘alphabet’ can therefore be mapped to 3-bit encodings. Since each encoded symbol is of fixed size, the total number of bits is then the size of one encoded symbol multiplied by the message length.

Ex 2.71

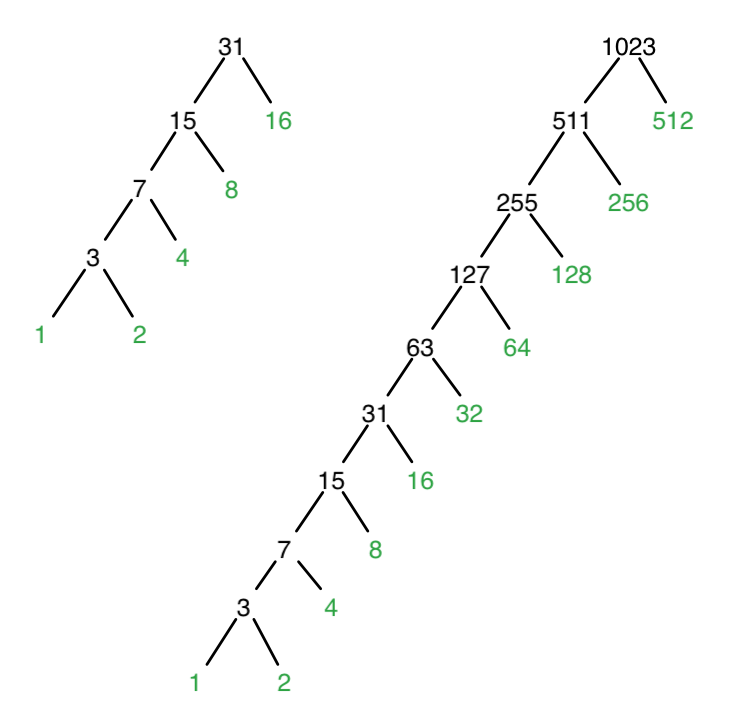

I don’t find the pattern to be particularly difficult to observe. With the frequencies as successive powers of 2, all symbols of a lower frequency will be placed in the tree prior to the next highest symbol being put in the tree. The result is a heavily skewed tree, with every branch having just one leaf on the right, and the entire rest of the tree on the left.

This diagram shows the weights of each node, with the leaves marked in green.

It’s pretty easy to see that the most frequently used symbol is at the top, and encoded with a single bit. The most infrequent symbol will be at the deepest leaf of the tree. Every branch except the very last one has just one symbol encoded at that depth, meaning that the tree is n – 1 levels deep. That will be the length of the most infrequent symbol, because each level of the tree corresponds to one binary bit.

Ex 2.72

Obviously the answer depends on the approach taken in encode-symbol. The straightforward approach I used may need to check every node of the tree, but it never checks a node more than once. Checking any node takes just a few short steps, no matter the size of the tree. The procedure can never be slower than the worst case in which every node is checked, and the total number of nodes in the tree scales directly with n. This makes it O(n) in the number of steps.

The problem statement, and the description of encoding in the text, suggests an alternate approach, in which the symbol list is examined at each step in order to determine which branch to descend to. Such an approach would avoid having to examine any extra leaves, but also adds the number of steps required to search the symbol list. Searching that list is in general an O(n) operation, and again the worst case involves searching through a number of nodes proportional to n (this is dependent on the tree depth, but Huffman trees are by design unbalanced). So at a rough guess this is potentially an O(n2) operation! In practice, however, the tree is more likely to not be so deep, and the symbol search is probably fairly fast. Moreover, the expected frequencies ought to correspond to the real-world symbols encoded, meaning that a larger proportion of the computation time while encoding will be on the symbols that are not deep in the tree. Very few searches will actually need to examine n nodes. As the text states, giving a general answer here is difficult.

For specific case in Exercise 2.71, I’ll just analyze the simple approach I implemented. As for what a ‘step’ constitutes, let’s consider it any call to find-symbol. There’s nothing more complicated in it than a call to cons, so in practical terms, it’s probably the function call itself that requires the most time. Encoding the least frequent symbol takes n steps. Encoding the most frequent symbol will end up visiting every node, taking 2n – 1 steps.

That is a fairly specific case, of course. Consider that if my approach merely searched the right tree first, it would be much faster for that tree: the most frequent symbol would never take more than two steps to encode. But there isn’t really a good way to figure out the advantage without knowing the tree in advance.